Testnode Access

Summary

We have about 250 baremetal testnodes that get automatically reserved (locked) and unlocked by teuthology “workers.” Workers are daemons on the teuthology.front.sepia.ceph.com VM that are fed jobs via a beanstalk queue. This page will cover setting up your workstation to lock and unlock testnodes as well as schedule teuthology suites.

Baremetal testnodes get OSes automatically “installed” using FOG when you lock them either via teuthology-lock or teuthology-suite.

Teuthology Config

Most developers schedule suites from the teuthology.front.sepia.ceph.com VM, which automatically locks and unlocks machines.

However, if you wish to run teuthology commands from your workstation, see https://docs.ceph.com/projects/teuthology/en/latest/INSTALL.html#installation-and-setup.

Once you've got teuthology added to your workstation path, make sure you copy the current /etc/teuthology.yaml from teuthology.front.sepia.ceph.com to your local workstation's ~/.teuthology.yaml.
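The copy step above can be sketched with scp. This is only an illustration: the function name fetch_teuthology_config and the DRY_RUN switch are hypothetical and not part of teuthology, and it assumes your workstation can already SSH to the teuthology VM as your user.

```shell
# Hypothetical helper: copy the shared teuthology config to this workstation.
# Usage: fetch_teuthology_config YOURUSER [DESTINATION]
fetch_teuthology_config() {
    user="$1"
    dest="${2:-$HOME/.teuthology.yaml}"

    # Build the scp command as positional parameters so paths stay quoted.
    set -- scp "${user}@teuthology.front.sepia.ceph.com:/etc/teuthology.yaml" "$dest"

    if [ "${DRY_RUN:-0}" = "1" ]; then
        # Print the command instead of running it.
        printf '%s\n' "$*"
    else
        "$@"
    fi
}
```

Running it with DRY_RUN=1 first shows the exact command without touching the network.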

SSH Config

Baremetal testnodes get reprovisioned with an already-configured OS image that includes a home dir for your user account. Your SSH public key should be in both your own user's authorized_keys file and the /home/ubuntu/.ssh/authorized_keys file on each testnode. You should always SSH as your username.

Example

ssh -i ~/.ssh/id_rsa YOURUSER@testnode123.front.sepia.ceph.com

You should replace ~/.ssh/id_rsa with whatever private key corresponds with your public key listed here.

To avoid interfering with other contributors' tests, you should refrain from logging into hosts that aren't locked by you via teuthology-lock.

If you run into any issues with a testnode that appear to be OS, network, or environment related (in other words: not ceph/test related), please file a ticket.

Unless you have a separate public/private key pair on the teuthology machine, you'll want to use SSH agent forwarding when SSH'ing to teuthology.front.sepia.ceph.com. You can do this either by using ssh -A USER@teuthology.front.sepia.ceph.com or adding the following to your workstation's ~/.ssh/config:

Host teuthology.front.sepia.ceph.com
    User $YOURUSER
    # This should be the private key matching the public key you provided in your user access ticket
    IdentityFile ~/.ssh/id_rsa
    # This is the important part
    ForwardAgent yes

Host smithi* mira* gibba*
    StrictHostKeyChecking no
    UserKnownHostsFile=/dev/null

This will allow you to SSH from your workstation → teuthology machine → all testnodes

Your SSH config on teuthology.front.sepia.ceph.com should have this:

Host *
    StrictHostKeyChecking no
    UserKnownHostsFile=/dev/null

VPSes

VPSes (Virtual Private Servers) are ephemeral KVM virtual machines that are spun up on demand using, for example:

teuthology-lock --lock-many 1 --machine-type vps --os-type ubuntu --os-version 14.04

More Information

Their hostnames are vpm{001..200}.front.sepia.ceph.com. A few mira are set aside as hypervisors. See vpshosts.

You must create an RSA SSH keypair (named id_rsa/id_rsa.pub) to ssh to VPSes from the teuthology host. We also recommend you have us add the public key to your pubkey file in the keys repo.

Note: You still need a keypair even when using SSH agent forwarding from your workstation.

The following ~/.ssh/config is required for you to lock VPSes from the teuthology host.

Host *
    StrictHostKeyChecking no
    UserKnownHostsFile /dev/null

Host vpm*
    User ubuntu

Troubleshooting

SSHException: Error reading SSH protocol banner

teuthology.exceptions.MaxWhileTries: reached maximum tries (100) after waiting for 600 seconds

- If you're using a static key file (as in you have a ~/.ssh/id_rsa file) on teuthology.front.sepia.ceph.com, make sure its permissions are 0600
- The SSH key can NOT have a passphrase (unless you're doing SSH Agent Forwarding?)
- The SSH key can NOT have been generated using OpenSSH version >= 7.8p1-1 ([dpkg -l|rpm -qa] | grep openssh to find out)
  - Either generate your SSH key from teuthology.front.sepia.ceph.com or try ssh-keygen -t rsa -m PEM
- rm -f ~/.ssh/known_hosts and add UserKnownHostsFile /dev/null to your SSH config.
- Ask Adam Kraitman or Dan Mick to capture new FOG images that include your public SSH key.
- You must have ForwardAgent yes set for teuthology.front.sepia.ceph.com in your workstation's ~/.ssh/config file.

The newest version of paramiko doesn't support SSH keys that have BEGIN OPENSSH PRIVATE KEY in them. See https://github.com/paramiko/paramiko/issues/1015.
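Two of the checks above (file permissions and key format) can be scripted. The following is a minimal sketch, not an official tool: check_teuthology_key is a hypothetical name, and it only inspects the mode bits and the first line of the key file.

```shell
# Hypothetical helper: sanity-check a private key used from the teuthology host.
# Usage: check_teuthology_key [PATH_TO_PRIVATE_KEY]
check_teuthology_key() {
    key="${1:-$HOME/.ssh/id_rsa}"
    status=0

    if [ ! -f "$key" ]; then
        echo "missing key file: $key"
        return 1
    fi

    # find -perm 600 matches only when the mode is exactly 0600.
    if [ -z "$(find "$key" -prune -perm 600)" ]; then
        echo "permissions on $key are not 0600"
        status=1
    fi

    # Keys in the newer OpenSSH format start with this header.
    if head -n 1 "$key" | grep -q 'BEGIN OPENSSH PRIVATE KEY'; then
        echo "$key is in OpenSSH format; try ssh-keygen -t rsa -m PEM"
        status=1
    fi

    return "$status"
}
```

The function prints one line per problem it finds and returns non-zero if any check fails, so it can be used in an if statement before scheduling a run.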

Out-Of-Band Management

Further reading

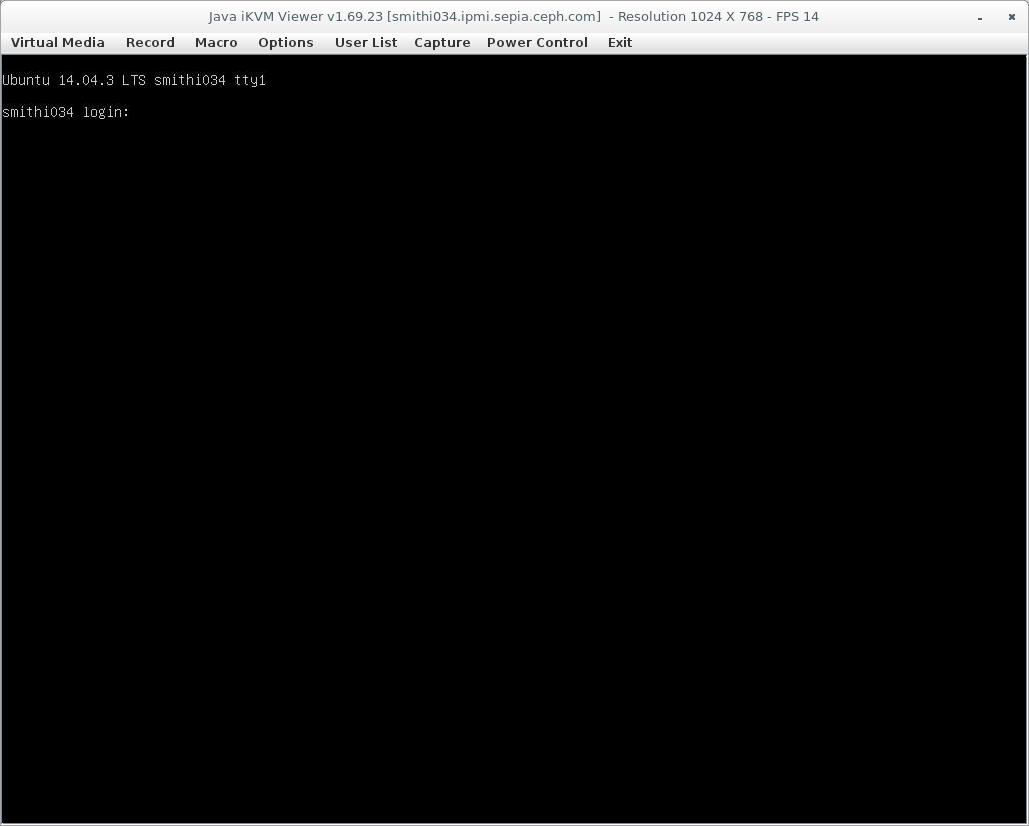

IPMI

Baremetal testnodes are accessible via out-of-band (OOB) management controllers, or BMCs. If you're unable to reach a host via ssh on its front.sepia.ceph.com address, you can try accessing it using conserver and power cycle via ipmitool.

Power Cycle Example

ipmitool -I lanplus -U inktank -P XXXXX -H testnode123.ipmi.sepia.ceph.com chassis power cycle

Note the 'ipmi' vs 'front' in the FQDN

Other common IPMI power commands include:

chassis power on
chassis power off
chassis power status
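Since the only difference between the SSH and IPMI hostnames is 'front' vs 'ipmi', a small wrapper can derive one from the other. This is a sketch under assumptions: front_to_ipmi and ipmi_power are hypothetical helper names, and the password is read from an IPMI_PASSWORD environment variable that you would set yourself.

```shell
# Hypothetical helper: turn a front FQDN into the matching ipmi FQDN.
front_to_ipmi() {
    printf '%s\n' "$1" | sed 's/\.front\./.ipmi./'
}

# Hypothetical helper: run one of the chassis power commands listed above.
# Usage: ipmi_power testnode123.front.sepia.ceph.com status
ipmi_power() {
    host="$1"
    action="$2"

    case "$action" in
        on|off|cycle|status) ;;
        *)
            echo "unsupported power action: $action" >&2
            return 2
            ;;
    esac

    # IPMI_PASSWORD is an assumed environment variable, not a lab convention.
    ipmitool -I lanplus -U inktank -P "$IPMI_PASSWORD" \
        -H "$(front_to_ipmi "$host")" chassis power "$action"
}
```

Checking the action before calling ipmitool avoids sending a mistyped command to a BMC.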